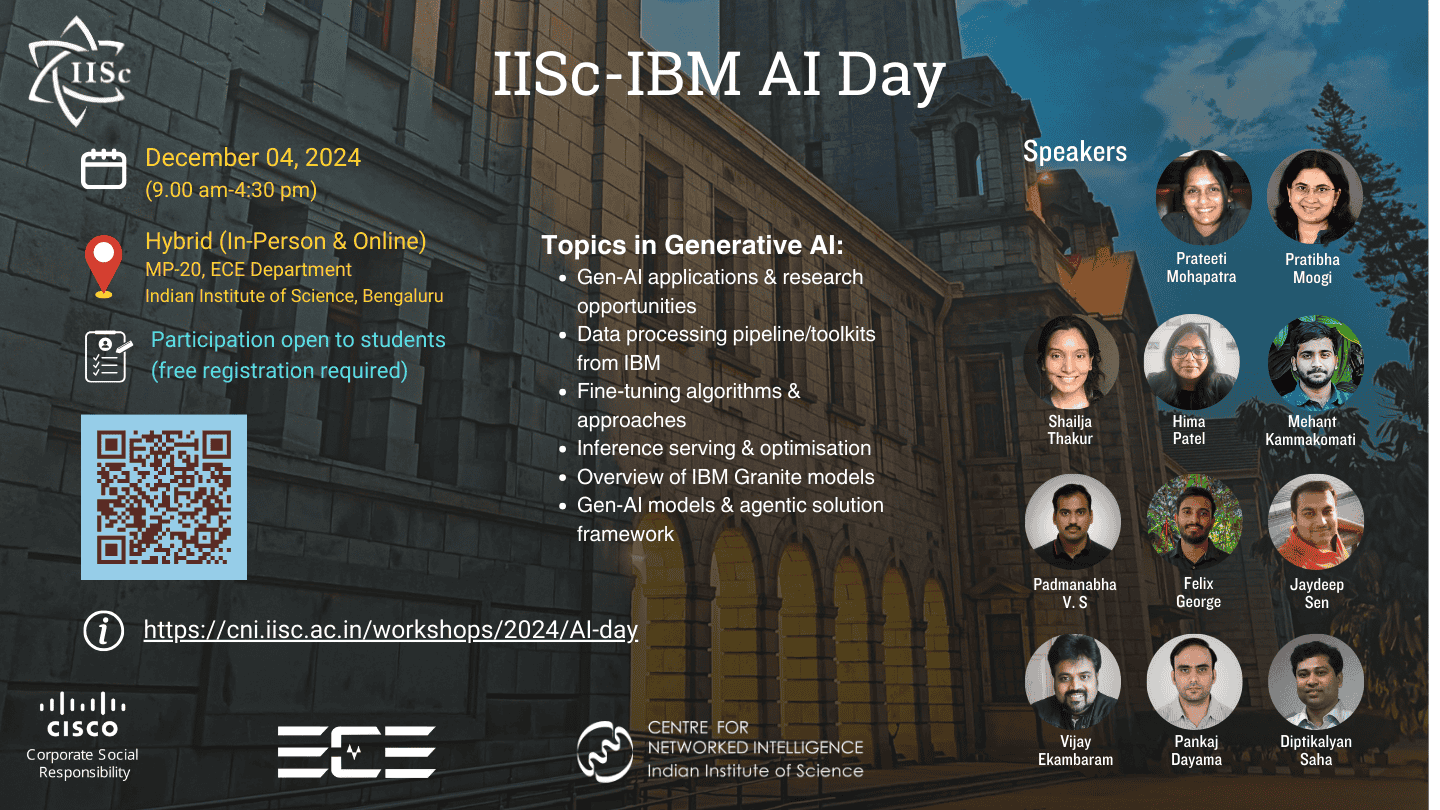

One-day workshop on topics in Generative AI

IISc-IBM AI Day is being jointly organized by the Centre for Networked Intelligence (with support from Cisco CSR) and IBM-IISc Hybrid Cloud Lab, in collaboration with IBM India Research Lab.

The goal of this workshop is to introduce the audience to Generative AI, a set of IBM toolkits for Gen AI, and IBM's LLMs for diverse applications.

🗓️ Date

December 04, 2024 (9am-4:30pm)

📍 Venue

In-person: Room MP-20, ECE Department, Indian Institute of Science Campus, Bengaluru

(📌 Map location)

Online: link will be shared with registered participants.

👪 Target audience

The workshop is aimed at engineering and science students pursuing undergraduate or graduate programs in India who are keen to learn how Gen AI is applied in diverse domains and how to build systems for Gen AI.

🗒️ Schedule, topics, speakers, and learning objectives

| Time | Topic | Speaker |
|---|---|---|
| 9:00-9:30am | Registration | |
| 9:30am | Welcome note | |
| 9:35-10:15am | Gen AI applications and research opportunities in IT automation | Prateeti Mohapatra & Pratibha Moogi |
| 10:15-11:00am | Data processing pipeline/toolkits from IBM for Gen AI models development | Shailja Thakur & Hima Patel |
| Break | | |
| 11:20-12:05pm | Fine-tuning algorithms/approaches | Mehant Kammakomati & Padmanabha V. S. |
| 12:05-12:50pm | Inference serving & optimization approaches | Felix George |
| Lunch break | | |
| 2:15-3:30pm | IBM Granite models: code, language, and timeseries | Jaydeep Sen, Vijay E, & Pankaj Dhayama |
| Break | | |
| 3:45-4:30pm | Gen AI models and agentic solution framework | Diptikalyan Saha |

👥➕ Registration

In-person participation

Thank you for the overwhelming interest in attending AI Day in person. For logistical reasons, in-person slots are limited and have all been taken, so we are no longer accepting new registrations.

Unfortunately, we were able to confirm in-person registration for only some of those who registered. We have sent online meeting details to those we could not accommodate in person so that they can join online.

Online participation

Registration for online participation is closed now.

We have emailed the online meeting details to all registered participants. Please check your spam folder too. If you haven't received an email from outreach.cni@iisc.ac.in with the online meeting link, please email outreach.cni@iisc.ac.in.

Learning objectives

Gen AI Applications and Research opportunities

As the world shifts from traditional ways of learning from data toward the generative computing paradigm, this talk briefly covers the applications and research opportunities in this space. It also covers the key aspects to consider when building end-to-end Generative AI capabilities that serve a given purpose with an underlying business goal.

Data Processing Pipeline/Toolkits from IBM for Gen AI models development

Building robust and reliable Large Language Models (LLMs) hinges on the quality of the data used for training. This talk delves into the critical role of data quality in LLM development, particularly for code generation tasks. We will discuss the challenges posed by low-quality data and explore state-of-the-art techniques for data preparation. The session will then provide a hands-on demonstration of building data pipelines using the Data Prep Kit, an open-source library. Participants will learn to construct and execute data processing pipelines, clean and filter data, and prepare it for fine-tuning LLMs. By the end of this session, participants will be equipped to build effective data pipelines for their own LLM projects.

Fine-tuning Process / Approaches

The performance required of a Large Language Model (LLM) depends on the use case. Some use cases need the LLM to specialize in a specific domain, which means the performance bar in that domain is much higher than in others. To meet that bar, the LLM needs to be fine-tuned for the domain. Participants will learn the requirements of a fine-tuning process and the software stack that can facilitate it. We will also cover the techniques available for efficient fine-tuning.

Inference Serving & Optimisation approaches

LLM (Large Language Model) inferencing refers to the process of using a pre-trained model to generate outputs or make predictions based on new, unseen data. The efficiency of LLM inferencing is influenced by factors like model size, computational resources, and optimization techniques, with a focus on ensuring fast, accurate, and resource-efficient predictions. This process is critical for deploying LLMs in real-world applications, where they can assist in a wide range of tasks, from chatbots and content creation tools to AI agents. In this session, we discuss the evolution of inference engines, optimisation for performance and power, and monitoring of LLM inferencing engines.

Overview of IBM LLMs - Granite models for Code & Language, Time-series models

This session will touch upon (a) the evolution of LMs into Large Language Models: scaling laws, in-context learning, and instruction tuning; (b) working with LLMs: example demonstrations; and (c) use cases of LLMs: RAG applications. It will also give a brief introduction to IBM LLMs: the Granite series for code and language, and time-series models.

Gen AI Models and Agentic Solution Framework

AI agents have gained substantial attention in recent years due to the surge in AI capabilities and the demand for automation in complex tasks. AI agents' ability to reason, plan, act, and interact represents a significant advancement over traditional automation techniques. This talk will provide theoretical insights into AI agents' properties and frameworks like LangChain and LangGraph, and will include practical demonstrations of how they can be applied to software engineering tasks such as test case generation, program repair, and application understanding.

✉️ Contact

Please email outreach.cni@iisc.ac.in with the subject "AI Day" for any queries.

Sponsors

Supported by